World Building

October 2023 | Technology Report | Military Training

A powerful image generator is at the heart of any truly realistic simulation system, and the possibilities opened up by the technology are driving training innovation for armed forces worldwide.

Above: An F-16 3D real-time entity seen ‘in flight’ over VRSG’s CONUS NAIP terrain. (Image: MVRsimulation)

Simulation can be simply defined as the imitation of a system or environment in order to support training or experimentation. Naturally, the more accurate and realistic the simulation, the more useful it will be to its users.

In the case of military training, a high level of realism leads to a genuine suspension of disbelief for the trainee, with more effective immersion in the simulated environment and greater engagement with the scenario.

This level of realism is heavily dependent on the capability of the image generator (IG) underpinning the simulation. Currently, there are significant changes under way in the IG marketplace as it applies to defence training and simulation.

Game engines such as Unreal and Unity, which benefit from huge investment by the gaming industry, have entered the defence world and are now being utilised for military simulation, while other longstanding players (most recently Presagis) have departed.

Those solutions that have persisted – and succeeded – in the market are marked out in several ways: namely, their usability, flexibility and ability to evolve as new customer requirements emerge.

Complete package

One distinguishing factor between different IGs is the extent to which they offer a complete solution to the user. Some may only provide scene-generation software, without content, requiring the customer to purchase terrain and 3D models from a third party. Others may not provide a complete standalone IG application, so the customer must build a full solution on the vendor's API, which demands in-house software development expertise.

Others, meanwhile, may provide a complete solution which requires minimal intervention by the user. The approach of MVRsimulation falls into this last category, and the company has been operating in the military IG marketplace for over 20 years with its Virtual Reality Scene Generator (VRSG).

VRSG is a Microsoft DirectX-based render engine that provides geo-specific simulation as an IG with game-quality graphics. It enables users to visualise geographically expansive and detailed virtual worlds at real-time, interactive frame rates on commercially available PCs, using Microsoft standards.

As a DIS (Distributed Interactive Simulation)-based solution, VRSG is fully interoperable with other compliant applications through DIS itself or the Common Image Generator Interface (CIGI).
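To give a sense of what interoperability 'through DIS itself' means on the wire, the sketch below packs and unpacks the 12-byte header that begins every DIS protocol data unit (PDU). It follows the header layout in IEEE 1278.1 as generally documented; the function names are illustrative only, and nothing here is taken from VRSG's own code or API.

```python
import struct

# DIS PDU header: four 8-bit fields, a 32-bit timestamp, a 16-bit length
# and 16 bits of padding, all in network (big-endian) byte order.
# Assumption: the final 16 bits are treated as plain padding; DIS version 7
# repurposes part of this field for PDU status.
DIS_HEADER = struct.Struct(">BBBBIHH")


def pack_pdu_header(protocol_version, exercise_id, pdu_type,
                    protocol_family, timestamp, pdu_length):
    """Return the 12-byte header for a PDU (illustrative helper)."""
    return DIS_HEADER.pack(protocol_version, exercise_id, pdu_type,
                           protocol_family, timestamp, pdu_length, 0)


def unpack_pdu_header(data):
    """Decode the first 12 bytes of a received PDU into a dict."""
    version, exercise, pdu_type, family, timestamp, length, _pad = (
        DIS_HEADER.unpack(data[:DIS_HEADER.size]))
    return {
        "protocol_version": version,
        "exercise_id": exercise,
        "pdu_type": pdu_type,
        "protocol_family": family,
        "timestamp": timestamp,
        "pdu_length": length,
    }


# Example values: PDU type 1 in protocol family 1 is an Entity State PDU;
# the length of 144 is simply an example figure for this sketch.
header = pack_pdu_header(7, 1, 1, 1, 0, 144)
fields = unpack_pdu_header(header)
```

Any two applications that agree on this layout, and on the PDU bodies that follow it, can exchange entity positions and events without sharing code, which is the basis of the interoperability described above.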

Furthermore, as an execution-ready render engine, VRSG supports but does not require programming for use. Configuration files and interface protocols provide users with the ability to control basic components of the engine, while developers can use the plugin interface to augment its functionality with their own features.

Garth Smith, president and co-founder of MVRsimulation, told Shephard that the company’s goal has been to provide a solution where image generation, terrain and models all work ‘straight out of the box’ without further development, enabling customers to concentrate on controlling the IG with the simulation host. VRSG can also provide deeper user development capability where required.

Smith said that MVRsimulation had concentrated on developing an interface to control the IG, originally using its own protocol, but then moving to CIGI when this was available.

He explained that VRSG originated in the late 1990s as a concept to develop dynamic terrain with an early focus on PC graphics. This was coupled to a desire to create a more open market and provide a more responsive service to customers.

It had become apparent that accurate terrain correlation was important, and Smith said that MVRsimulation was one of the first to identify this and introduce the technology needed. Its early database construction was based on ModSAF (Modular Semi-Automated Forces) terrain, but this was unsatisfactory. The terrain tools existing at the time had limitations, he said, and were in an inefficient format ‘so we decided to develop our own’.

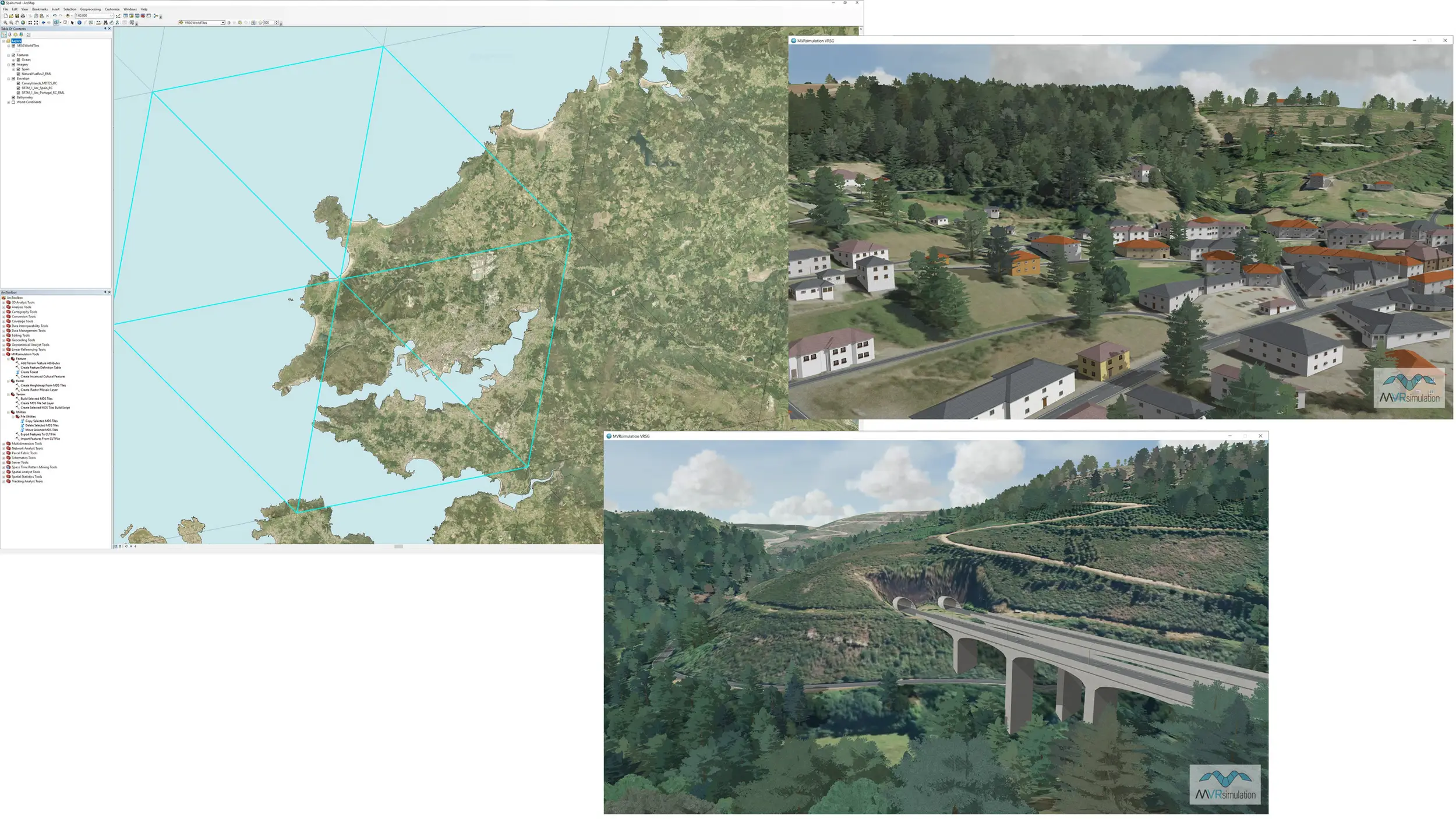

What emerged was MVRsimulation’s Terrain Tools plugin, designed to leverage Esri software as its GIS data foundation. ‘Leveraging Esri’s vast infrastructure and building our own value-adding plugin on top of it gave us access to commercial open GIS data standards and allowed us to focus on developing the runtime-optimised format used by our image generator,’ Smith said.

MVRsimulation’s Terrain Tools plugin for Esri’s ArcGIS software uses elevation points and geo-specific imagery as source data, which enables users to turn their geospatial data into real-time 3D terrain to render within VRSG. ‘More recently, the Esri CityEngine plugin enables our users to augment our artist-rendered 3D building content with very large volumes of good-quality building content with architecturally relevant textures using Open Street Map (OSM) data for proper geo-referencing,’ Smith said.

This is intended to give users as much control over terrain construction as possible, and can be easily understood by anyone with a comprehension of geospatial data concepts and some experience with ArcGIS.

Above: MVRsimulation's Terrain Tools for Esri ArcGIS, showing a map of northwest Spain; and a VRSG rendering of the resulting geo-specific 3D terrain outside Pedrafita do Cebreiro along the A6 highway of Lugo province. (Image: MVRsimulation)

MVRsimulation also uses terrain data from as many other sources as possible, including licensing imagery from commercial providers: ‘We take the imagery, convert it into our very optimised format and redistribute it.’

Smith added that MVRsimulation does not use specialised terrain formats as terrain source inputs, and instead supports GIS open standards. VRSG can support external conversion utilities where needed, but ultimately ‘government-mandated formats controlled by a chosen vendor are not necessary – you get far superior performance by going to the source data’.

‘By operating a business model where we use redistributable imagery compiled into our runtime format and add highly specific value to produce content, we oversee the entire terrain ecosystem for our IG product – this gives us accountability and a single point of contact for our customers,’ Smith said. ‘We then continue to work closely with them, building new capability, terrain and models to support them as their training mission develops.

‘Ultimately, we want customers to think of us as part of their development staff – if a customer wants any particular feature added they only have to ask.’

He added that this work is carried out free of charge for VRSG users with active software maintenance, as long as it is in line with the company's product development – and the vast majority is.

User experience

The result of this approach is an extensive pre-existing terrain database that can be added to by the user to meet their own requirements. The current VRSG database includes 3D terrain for most of the world, covering the continental US plus Alaska and Hawaii (CONUS++), and the continents of Africa, Asia, Australia and Oceania, Europe and North and South America, in its round-earth geocentric terrain format.

Each dataset includes high-resolution insets of airports, airfields and training ranges, plus 3D replicas of urban environments, which can be geo-typical, geo-specific or a mixture of the two.

For geo-typical content, Esri’s CityEngine can quickly generate high-resolution datasets comprising geo- and culture-typical buildings to populate terrain built in Terrain Tools.

These procedural 3D urban models are assembled from building footprints and road vectors derived from OSM data, which are then extruded and textured using computer-generated architecture (CGA) rule files. A single rule file can generate many 3D models using feature attribute information stored in the OSM data.
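The idea of one rule producing many buildings from footprint attributes can be illustrated with a minimal sketch. This is plain Python, not CGA, and it is not how CityEngine or VRSG implement the process; the function names, the 3m storey height and the 6m fallback are all assumptions made for the example. The `height` and `building:levels` keys are real OSM tags, but the sketch assumes `height` holds a plain number in metres.

```python
def building_height(tags, metres_per_level=3.0, default_height=6.0):
    """Pick an extrusion height from OSM-style tags (hypothetical rule)."""
    if "height" in tags:
        # Assumption: value is a bare number in metres, e.g. "12.5".
        return float(tags["height"])
    if "building:levels" in tags:
        return int(tags["building:levels"]) * metres_per_level
    return default_height


def extrude_footprint(footprint, height):
    """Extrude a 2D footprint into a simple prism.

    footprint: ordered list of (x, y) vertices in metres, with the first
               vertex not repeated at the end.
    Returns (vertices, walls, roof), where walls are quads and roof is a
    polygon, both given as tuples of indices into vertices.
    """
    n = len(footprint)
    if n < 3:
        raise ValueError("a footprint needs at least three vertices")
    base = [(x, y, 0.0) for x, y in footprint]
    top = [(x, y, float(height)) for x, y in footprint]
    vertices = base + top
    # One wall quad per footprint edge: two base corners, two top corners.
    walls = [(i, (i + 1) % n, n + (i + 1) % n, n + i) for i in range(n)]
    roof = tuple(range(n, 2 * n))
    return vertices, walls, roof


# The same rule applied to any footprint yields a different building.
square = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
height = building_height({"building:levels": "4"})
vertices, walls, roof = extrude_footprint(square, height)
```

Because the rule reads its parameters from each feature's attributes, running it across thousands of footprints gives thousands of distinct models, which is also why a rule written for a single landmark offers little return.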

Richard Rybacki, MVRsimulation co-founder and chief technology officer, said that before this ‘we had to build these areas by hand, but now the tool does it for us and it saves a significant amount of time’.

Nonetheless, select areas of high-resolution insets are still built by hand, where doing so will add more value for the user than the CityEngine procedural models.

‘CityEngine is great for adding large amounts of culture to an area of interest very quickly but it can lack the ability to create unique geo-specific models which are often better when they are constructed by a 3D artist using traditional modelling software. Runway models are another type of geo-specific model that can be created in the same way,’ Rybacki said.

‘For example, in our Yemen terrain, we have several thousand buildings constructed from a CGA rule file which is capable of building up lots of 3D content instantly, but it lacks the ability to create unique landmark buildings or more intricate models quickly using traditional 3D modelling software.

‘So, in the Aden terrain model our 3D artist added some realistic geo-specific models which helped to enhance the terrain. These were models that CityEngine could not easily replicate, because creating a rule file for one unique landmark building doesn’t scale well. One of these unique models is Big Ben Aden which is a large clock tower. Another is a shipping crane which is definitely a more complicated model.’

Whether this degree of detail is required by the user is dependent on the training that needs to be delivered. The higher level of fidelity might not be required to support fast jet air-to-air combat training at altitude, but for air-to-ground, other joint or ground-based training, a hyper-realistic virtual environment will add significant value.

Above: VRSG’s Hajin, Syria terrain was built in Terrain Tools for Esri ArcGIS from geo-specific high-resolution imagery and elevation source data. (Image: MVRsimulation)

Expanding horizons

Critically, there are some training applications which require vast areas of high-fidelity terrain, both in the air and at ground level, notably close air support (CAS), joint fires and joint terminal attack controller (JTAC) simulation training. VRSG is particularly effective in supporting these applications.

What heightens realism for these applications is MVRsimulation’s round-earth terrain format, which enables the terrain to model the earth to a high degree of accuracy over its entire surface. This accuracy is vital for targeting applications and determining intervisibility.

For example, in joint training missions, such as CAS or forward observer scenarios incorporating a JTAC trainee on the ground and a pilot in a networked aircraft simulator, VRSG renders the shared virtual world from the same terrain so that all operators experience the same high-resolution simulation all the way to the horizon.

The patented terrain architecture represents the earth’s surface in a geocentric co-ordinate system, which accurately depicts the curvature of the planet and handles ordinate axis convergence at the poles. This approach solves a variety of problems associated with projection-based, monolithic visual databases, so improvements can be realised in database production, distribution, storage and updating; there are also many runtime and mission function benefits.
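A geocentric representation can be illustrated with the standard conversion from latitude, longitude and height to earth-centred, earth-fixed (ECEF) Cartesian co-ordinates on the WGS 84 ellipsoid. The sketch below uses the textbook formula and published WGS 84 constants; it is offered only to show the principle and makes no claim about the internals of VRSG's patented format.

```python
import math

# WGS 84 ellipsoid constants (standard published values).
WGS84_A = 6378137.0                    # semi-major axis, metres
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, height_m):
    """Convert geodetic co-ordinates to geocentric (ECEF) x, y, z in metres."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    # Radius of curvature in the prime vertical at this latitude.
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + height_m) * math.cos(lat) * math.cos(lon)
    y = (n + height_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + height_m) * math.sin(lat)
    return x, y, z


equator = geodetic_to_ecef(0.0, 0.0, 0.0)   # (6378137.0, 0.0, 0.0)

# At the pole every longitude maps to (almost exactly) the same point,
# so there is no seam or singularity in the Cartesian representation.
pole_a = geodetic_to_ecef(90.0, 0.0, 0.0)
pole_b = geodetic_to_ecef(90.0, 135.0, 0.0)
```

In a single Cartesian frame of this kind, distances and lines of sight can be computed directly in three dimensions, without the distortion that a map projection introduces over large areas.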

‘The result is that VRSG provides enormously detailed terrain and very expansive areas which you can fly over in a simulation at high speed, or move through on the ground at walking pace,’ Rybacki said.

‘When engaged in a mission such as target acquisition between a JTAC and CAS pilot, this is a differentiator in terms of matching the real-world operating environment, where map co-ordinates, landmarks and even the 3D model target’s appearance in the scenario are critical in delivering effective training.’

Above: A VRSG close air support training scene showing the JTAC and an A-10 3D entity in the distance. (Image: MVRsimulation)

The ability to render terrain at extremely high frame rates has also opened the door to increasing use of mixed-reality head-mounted displays (HMDs) such as the Varjo XR-3 and thus a new generation of VRSG users.

‘This type of user needs more than nice-looking terrain, they need the 90Hz refresh rate, the 200Hz eye tracking with sub-degree accuracy, and one-dot calibration for foveated rendering, which is a technique that reduces the rendering workload by greatly reducing the image quality in the wearer’s peripheral vision as they move their head,’ Rybacki said.

‘They can also benefit from VRSG’s ability to visualise eye-tracking data, either for use in real-time simulation or to review in an after-action debrief. But while working on these emerging requirements, we also continue to support the requirements of our long-term users, many of whom have been using VRSG for multiple decades,’ he added.

RPAS revolution

Another application that needs to cover large areas with different simultaneous viewpoints, and reflect the presence of multiple sensors, is simulator-based RPAS or UAS training. Here, VRSG can create and display multiple viewports, or concurrent views, where each can be assigned to a different view of the scene.

Multiple viewports can overlap in a picture-in-picture arrangement, or be spatially apart in a picture-by-picture arrangement. Many VR and mixed-reality devices require at least two viewports (one for each eye) or four (two for each eye), depending on the manufacturer.

‘For RPAS applications you need to be able to simulate sensor and nose camera video, and transmit digital video to stimulate tactical display systems in ground control stations (GCS),’ Smith noted. A GCS, such as the USAF’s MALET-JSIL Aircrew Trainer (MJAT), which is used to train pilots and sensor operators on the General Atomics MQ-9 Reaper, can also include devices like the Remotely Operated Video Enhanced Receiver (ROVER) commonly used by JTACs.

This simulation includes not only the imagery but also metadata embedded in the video stream, such as telemetry and location information.

Above: A real-time 3D MQ-9 Reaper entity in flight over VRSG’s CONUS NAIP Grand Canyon terrain. (Image: MVRsimulation)

To provide effective training, a GCS simulator (or simulation embedded in a GCS) must provide the embedded metadata stream so that it can, for example, be displayed in overlays or extracted into a nine-line targeting message.

VRSG can generate both video stream and metadata in the key-length-value (KLV) encoding standard, as it does for MJAT. VRSG simulates the Reaper's camera payload by streaming real-time HD-quality H.264 video plus KLV metadata, allowing operators to train using the same hardware used to operate the actual aircraft, stimulating real ISR systems and interoperating with networked JTAC simulators.
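The key-length-value structure itself is simple, as the following sketch shows. It encodes and decodes generic KLV triplets with BER-encoded lengths, the scheme defined in SMPTE ST 336. The one-byte keys and the sample values are invented for the example and do not come from any real metadata dictionary; this is not VRSG's encoder, nor a complete metadata packet of the kind carried in an operational video stream.

```python
def encode_ber_length(n):
    """Encode a length in BER: short form below 128, long form otherwise."""
    if n < 0:
        raise ValueError("length must be non-negative")
    if n < 128:
        return bytes([n])
    size = (n.bit_length() + 7) // 8
    return bytes([0x80 | size]) + n.to_bytes(size, "big")


def encode_klv(key, value):
    """Build one key-length-value triplet from a key and value (bytes)."""
    return key + encode_ber_length(len(value)) + value


def decode_klv(buf, key_len=1):
    """Split concatenated triplets with fixed-size keys into (key, value) pairs."""
    items = []
    pos = 0
    while pos < len(buf):
        key = buf[pos:pos + key_len]
        pos += key_len
        first = buf[pos]
        pos += 1
        if first < 128:
            length = first                      # short form
        else:
            size = first & 0x7F                 # long form: size of length
            length = int.from_bytes(buf[pos:pos + size], "big")
            pos += size
        items.append((key, buf[pos:pos + length]))
        pos += length
    return items


# Hypothetical one-byte keys: 0x01 for a platform name, 0x02 for a payload.
packet = encode_klv(b"\x01", b"MQ-9") + encode_klv(b"\x02", bytes(200))
decoded = decode_klv(packet)
```

A receiver that does not recognise a key can still skip the item cleanly because the length is always present, which is part of what makes the format suitable for stimulating real tactical display systems.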

Rybacki said that this is a key discriminator for VRSG compared to other IGs, particularly for distributed mission operations training, adding ‘you can’t send video to the network that doesn’t have the embedded digital data’.

Crowdfunded content

Another element of VRSG is a 3D library that includes nearly 10,000 real-time models of weapons, vehicles, munitions, equipment, cultural features and character entities, together with geo-specific buildings and vegetation.

Using VRSG’s Scenario Editor tool, users can populate 3D terrain with these models to create and edit real-time training and pattern-of-life scenarios for playback.

Rybacki noted that the library contains more than 90% of the models required by the US Combat Air Force Distributed Mission Operations (CAF DMO) list and 95% of those designated mandatory, the majority with articulated parts, damage states and accurate physics-based infrared/thermal signatures.

CAF DMO compliance enables distributed combat exercises worldwide, ensuring that all trainees experience consistent, accurate visual 3D models across all platforms engaged in live/virtual training, mission rehearsal and distributed training operations.

The model library is updated both through MVRsimulation’s own initiatives and at customer request. Rybacki explained that MVRsimulation retains intellectual property in the models, which enables it to make the library and updates available free of charge to any user with a VRSG licence. Specific requests from individual customers are thus provided to all users as part of the update process, in what Rybacki described as akin to ‘crowdfunded content development’.

Above: 3D real-time JLTV Rogue Fires models pictured in VRSG's virtual Kismayo, Somalia, terrain. (Image: MVRsimulation)

Continuing development efforts are focused on multiple new terrains, including a National Agriculture Imagery Program (NAIP) CONUS terrain. This is currently replacing VRSG’s existing CONUS terrain and provides aerial imagery of the entire US during the different agricultural seasons.

Built using NAIP 1m imagery and 10m elevation data from the National Elevation Dataset (NED), it will provide updated aerial views with much greater resolution of the topography of the country. Already available for download by existing users, the terrain will be demonstrated at I/ITSEC in November.

‘The demonstration will show a 100km far horizon and no terrain anomalies during a simulated Mach 1 flight of our fixed-wing Part Task Mission Trainer simulator, with views shown on a standard display and inside the XR-3 headset,’ Smith said. ‘It will really give attendees the opportunity to understand what sets our terrain apart from the rest of the military IG market.’