The important part

July 2023 | Technology Report | Military Training

As new possibilities open up, selecting when and where to use a part-task trainer instead of a more comprehensive and sophisticated solution is a key element of planning for operators.

Above: The latest generation of MR headsets used in part-task training can track where the trainee is looking, information that can be used for after-action reviews. (Photo: MVRSimulation)

Operating any sort of military platform consists of several different tasks, the combination of which produces an effective capability. For example, piloting an aircraft (taking off, landing and airborne manoeuvring) is a distinct, separate skill from use of the aircraft’s sensors and weapons systems, loading and unloading a cargo bay, or deploying and operating an in-flight refuelling system.

All of these, and many similar activities in all three of the traditional domains (air, ground and naval), are separate tasks within the overall operation of a platform, and so can be trained separately, using a part-task trainer (PTT).

One of many broadly similar definitions of a PTT is: a synthetic training device to provide training for specific and selected operational tasks without requiring the learner to practise all of the tasks which are normally associated with a fully operational environment.

One common use for a PTT is aircraft tactical training. Here the requirement is to learn and practise using all of the aircraft’s fighting and sensing capabilities, and employing it tactically both individually and as part of a package, rather than flying it.

This does not need the complete immersive experience of a full-mission simulator (FMS), with a large dome and motion platform. A physically simple simulator that replicates the aircraft’s relevant systems and can be networked with other devices will suffice.

The most flexible form of PTT is one that can represent a number of different platforms through use of adjustable hardware and soft representations of instrument panels. MVRsimulation’s Part Task Mission Trainer (PTMT), which can be configured for training on current third- and fourth-generation combat aircraft in service with NATO nations, is a good example of such a solution.

Scenarios are run on the Battlespace Simulations (BSI) Modern Air Combat Environment (MACE) software and MVR’s Virtual Reality Scene Generator (VRSG), 3D terrain and models. VRSG provides the real-time 3D out-of-the-window and sensor views while MACE is a physics-based engine for constructively generated forces (CGF) or semi-autonomous forces (SAF) with pulse-level fidelity when modelling the electromagnetic environment.

Getting physical

To provide some element of the physical experience of being in an aircraft, the internally developed PTMT is based on a welded aluminium cockpit shell which represents the part of the fuselage from the nose to just behind the cockpit seat. A 22in touchscreen monitor gives a representation of the cockpit instrument panels, and an audio headset provides radio communications utilising an Up Front Control Device built on BSI’s Device Simulation Framework and DIS-based radio communications.

Above: The physical element of the PTMT comprises a cockpit shell with some non-virtual controls such as a reconfigurable HOTAS stick. (Photo: MVRSimulation)

For the physical controls MVR has incorporated a commercial-off-the-shelf (COTS) Thrustmaster hands-on throttle-and-stick (HOTAS) unit that can be changed between side-stick and centre-stick positions depending on the aircraft type being simulated.

A HOTAS stick offers a number of buttons and switches to control different functions such as throttle, radio communications, targeting cursor or sensor pod, and although these vary slightly in their position between aircraft types, there tends to be a convention as to switch positioning. This means a pilot can adjust to the use of the stick in the simulator after very brief familiarisation; alternatively, the system can integrate a customer-supplied HOTAS.
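The switch convention described above lends itself to a data-driven mapping. In this arrangement, one conventional layout covers most aircraft, and each type lists only the switches that differ. The sketch below illustrates that idea under stated assumptions. The control names, function names and aircraft profiles are all hypothetical. It is not MVR’s or Thrustmaster’s configuration format.

```python
from dataclasses import dataclass, field

# Hypothetical conventional layout: generic HOTAS control -> cockpit function.
DEFAULT_LAYOUT = {
    "throttle_axis": "throttle",
    "stick_trigger": "weapon_release",
    "throttle_thumb_switch": "radio_transmit",
    "throttle_slew_hat": "targeting_cursor",
    "stick_hat": "sensor_pod_select",
}


@dataclass
class HotasProfile:
    """Per-aircraft binding; only switches that break convention go in `overrides`."""
    aircraft: str
    stick_position: str  # "centre" or "side", matching the reconfigurable stick
    overrides: dict = field(default_factory=dict)

    def binding(self, control: str) -> str:
        # An aircraft-specific override wins; otherwise fall back to the convention.
        if control in self.overrides:
            return self.overrides[control]
        return DEFAULT_LAYOUT[control]


# Two notional aircraft types (illustrative only).
fighter_a = HotasProfile("notional_fighter_a", "centre")
fighter_b = HotasProfile(
    "notional_fighter_b",
    "side",
    overrides={"stick_hat": "targeting_cursor", "throttle_slew_hat": "sensor_pod_select"},
)
```

Keeping the convention in one table mirrors the point made in the text: because most switches stay where a pilot expects them, only a handful of differences need to be learned during familiarisation.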

BSI used its plug-in system to build a HOTAS-controllable generic tactical display and up-front control display that allow trainees to use functions they are familiar with, such as programming weapons, manipulating the entity display or entering frequencies in the radios.

The PTMT has been designed so it is light and easy to move. It weighs just over 200lb without electronics and can be transported in a single reusable crate or be trailer-mounted. However, Brian Young, MVR mechanical engineer, said it was envisaged that most customers would install the simulator in a fixed location.

Two computers run the different software packages (MACE and VRSG), and USB ports allow customers to add further capabilities. Pedals can also be added as a customer-selected option.

The environment outside the cockpit can be displayed in different ways. As originally developed, this could either be with a small dome or with a 34in, 3K resolution curved screen in front of the pilot. However, the latest version offers the Varjo XR-3 Focal Edition (FE) mixed-reality (MR) head-mounted display (HMD) that provides a 360° view of the outside environment for the wearer.

The HMD has a 115° horizontal field of view, ultra-low latency and dual 12-megapixel video passthrough at 90Hz with a depth of field of 30-80cm for MR, together with eye tracking of the wearer.

The XR-3 FE provides pilots with an immersive, all-round view of the outside world, and the MR capability enables them to see and touch all instrumentation in the cockpit.

This provides the best of both worlds, presenting a virtual environment and a similar visual experience as offered by an FMS at a fraction of the cost while still allowing the use of cockpit controls in a realistic way.

Nick Pinson, hardware engineer at BSI, said that ‘where the XR-3 really shines is in the visual range, five to ten nautical miles, such as if you are training and practising basic fighter manoeuvring (BFM) or perhaps carrier landings. You can’t do that with just a curved screen in front of you.’

He added that the XR-3 FE’s 90 frames-per-second rendering is crucial for mitigating motion sickness in the MR HMD. Meanwhile, its four rendering passes (two per eye) allow a second, smaller, high-pixel-density viewport to be rendered for each eye, letting the wearer see more detail on geometry within that viewport.

Above: Using the XR-3 FE headset can provide an equivalent experience to a full-motion simulator for many tasks, while costing much less. (Photo: MVRSimulation)

Garth Smith, president and co-founder of MVRsimulation, told Shephard that he considered the capability that the Varjo MR HMD offers has ‘changed the whole simulation environment’ and is ‘transforming PTT technology’.

Services rendered

VRSG is a Microsoft DirectX-based render engine and is the system’s image generator, creating the synthetic environment. Bert Haselden, MVR software engineer, explained that it is a distributed interactive simulation (DIS)-based application and is fully interoperable with other compliant applications through DIS or Common Image Generator Interface (CIGI). It has a whole-world terrain database and a large model library that is continually updated.
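Because VRSG is described as DIS-based, it may help to see what DIS interoperability looks like at the wire level. The sketch below parses the fixed 12-byte header that begins every DIS protocol data unit (PDU) under IEEE 1278.1. The header consists of:

- protocol version
- exercise ID
- PDU type
- protocol family
- timestamp
- length
- padding

The code illustrates the wire format only. It is not VRSG code, and the small type table covers just the Entity State and radio PDUs relevant to this report.

```python
import struct
from typing import NamedTuple

# IEEE 1278.1 PDU header: 12 bytes in network (big-endian) byte order.
#   B version | B exercise ID | B PDU type | B protocol family
#   I timestamp | H PDU length in bytes (header included) | H padding
HEADER_FORMAT = ">BBBBIHH"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 12

# Only a few PDU type codes: entity state plus the radio communications PDUs.
PDU_TYPES = {1: "Entity State", 25: "Transmitter", 26: "Signal", 27: "Receiver"}


class PduHeader(NamedTuple):
    version: int
    exercise_id: int
    pdu_type: int
    family: int
    timestamp: int
    length: int


def parse_header(datagram: bytes) -> PduHeader:
    """Decode the common header from the start of a received UDP datagram."""
    if len(datagram) < HEADER_SIZE:
        raise ValueError("datagram shorter than a DIS PDU header")
    version, exercise, ptype, family, ts, length, _padding = struct.unpack_from(
        HEADER_FORMAT, datagram
    )
    return PduHeader(version, exercise, ptype, family, ts, length)


def describe(header: PduHeader) -> str:
    name = PDU_TYPES.get(header.pdu_type, f"type {header.pdu_type}")
    return f"{name} PDU, exercise {header.exercise_id}, {header.length} bytes"


# Usage: build a sample header (version 6, exercise 1, Entity State) and decode it.
sample = struct.pack(HEADER_FORMAT, 6, 1, 1, 1, 0, 144, 0)
print(describe(parse_header(sample)))
```

Version 7 of the standard repurposes part of the final padding field. A production receiver would therefore also branch on the version byte before interpreting those bytes.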

This library contains more than 90% of the models required by the US Combat Air Force Distributed Mission Operations (CAF DMO) list and 95% of those designated mandatory, the majority with articulated parts, damage states and accurate physics-based infrared/thermal signatures.

CAF DMO compliance enables distributed combat training exercises worldwide. The entire model library and terrain database are available to all customers holding a VRSG licence and a maintenance contract.

Haselden also noted that the XR-3 FE’s eye-tracking capability has been integrated into VRSG so that the pilot’s gaze can be visualised for after-action review (AAR), depicted for each eye independently as a colour-coded 3D cone. This can help identify moments when attention to instrumentation or to an important activity was missed, and it provides objective, uncontestable data on what would otherwise be a subjective assessment.
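A gaze cone suggests a simple AAR question: was a given instrument or outside object inside the cone at a given moment, and for what share of the mission? The sketch below answers that question under stated assumptions:

- gaze samples arrive as an eye position plus a direction vector, one stream per eye;
- the cone has a fixed half-angle, with a hypothetical 2° default.

It is not VRSG’s implementation.

```python
import math

Vec3 = tuple[float, float, float]


def _normalise(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    if n == 0:
        raise ValueError("zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)


def in_gaze_cone(eye: Vec3, gaze_dir: Vec3, target: Vec3, half_angle_deg: float = 2.0) -> bool:
    """True if `target` lies inside the cone with apex `eye` and axis `gaze_dir`."""
    to_target = _normalise((target[0] - eye[0], target[1] - eye[1], target[2] - eye[2]))
    axis = _normalise(gaze_dir)
    # Angle test via the dot product of unit vectors: cos(angle) >= cos(half-angle).
    cos_angle = sum(a * b for a, b in zip(axis, to_target))
    return cos_angle >= math.cos(math.radians(half_angle_deg))


def dwell_fraction(samples, target: Vec3, half_angle_deg: float = 2.0) -> float:
    """Share of (eye, gaze_dir) samples for one eye in which `target` was in the cone."""
    if not samples:
        return 0.0
    hits = sum(in_gaze_cone(eye, g, target, half_angle_deg) for eye, g in samples)
    return hits / len(samples)
```

Run separately on left-eye and right-eye samples, a low dwell fraction on, say, a radar warning display is the kind of objective evidence of missed attention described above.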

MACE is a physics-based CGF/SAF scenario generator. Pinson explained that it includes all emissions in the battlespace, covering the visual, radio frequency (RF), ultraviolet (UV) and infrared (IR) spectrums. ‘For example, we model the propagation of radar waves over the terrain and their interaction with it, to provide an accurate replication of performance.’
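MACE models radar propagation over terrain, and that goes well beyond anything shown here. The simplest ingredient of any such model is the radio horizon over a smooth Earth, using the common 4/3 effective-Earth-radius approximation for atmospheric refraction. The sketch below shows why low-flying targets disappear from a radar’s view. It deliberately ignores terrain masking, multipath and clutter. It is an assumption-laden textbook model, not MACE’s.

```python
import math

EARTH_RADIUS_M = 6_371_000.0
K_FACTOR = 4.0 / 3.0  # standard-atmosphere refraction approximation


def horizon_distance_m(height_m: float, k: float = K_FACTOR) -> float:
    """Distance to the radio horizon for an antenna `height_m` above a smooth Earth."""
    if height_m < 0:
        raise ValueError("height must be non-negative")
    return math.sqrt(2.0 * k * EARTH_RADIUS_M * height_m)


def radar_can_see(radar_height_m: float, target_height_m: float, range_m: float,
                  k: float = K_FACTOR) -> bool:
    """Smooth-Earth line-of-sight check only: no terrain, multipath or clutter."""
    limit = horizon_distance_m(radar_height_m, k) + horizon_distance_m(target_height_m, k)
    return range_m <= limit
```

With these assumptions, a 10m mast cannot see a target flying at 30m beyond roughly 36km, while a target at 3,000m remains above its horizon at 200km. Real terrain interaction only tightens those limits.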

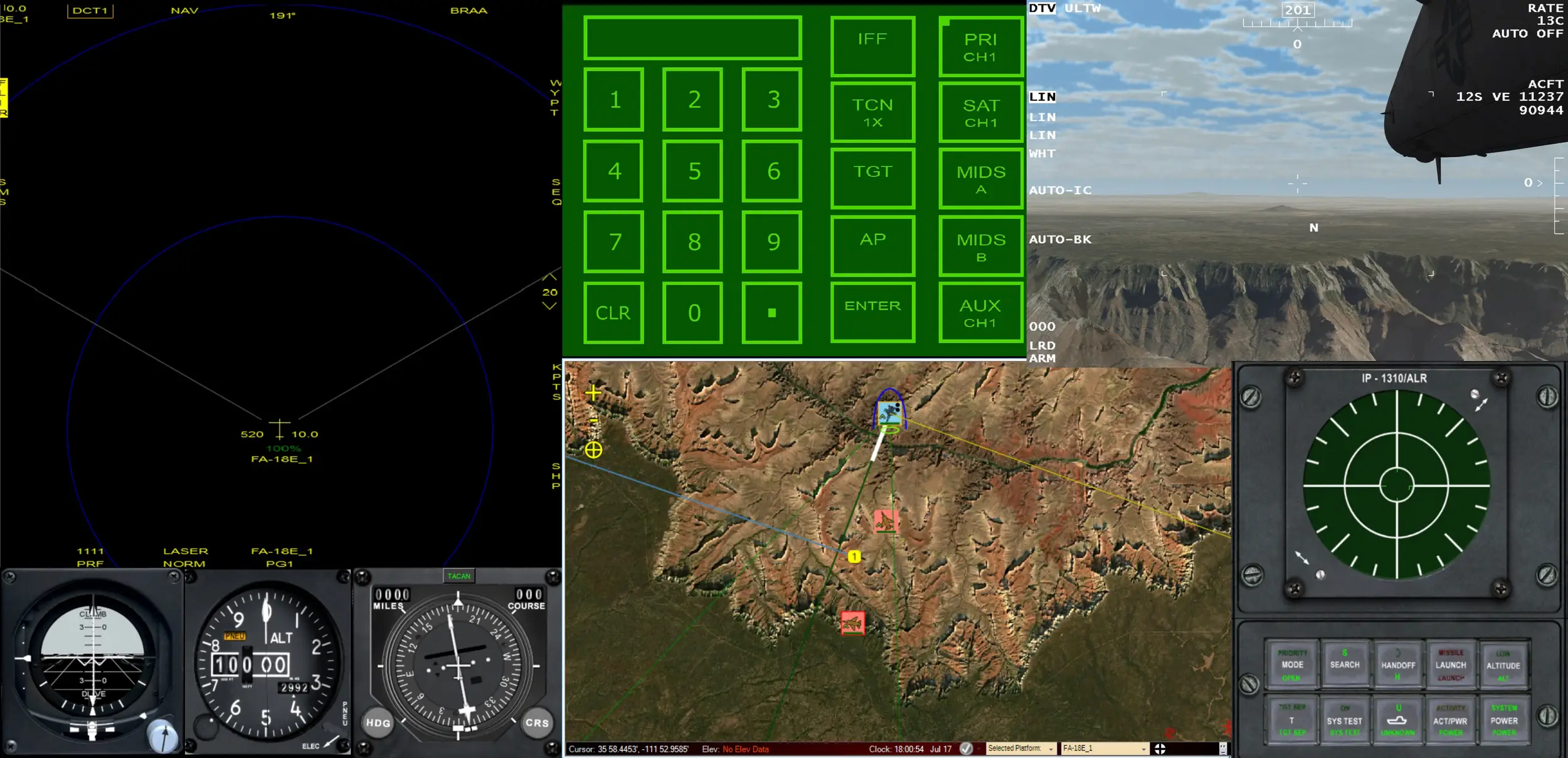

All tactical and avionics displays in the PTMT are generated by MACE, Pinson said. One key component is a tactical display which ‘shows the pilot’s radar tracks, the ability to lock those tracks and employ their weapons’.

It provides the interface to switch between the forward-looking IR (FLIR) and full motion video (FMV), and also shows the aircraft’s stores management system, the weapons carried and the programmed parameters within those weapons.

Another component is the Up Front Control Device (UFCD). It provides data entry and also handles the communications stack of up to five emulated radios covering VHF/UHF, tactical data link and satellite communications. These are DIS-based devices using BSI’s Viper product, and the stack enables the PTMT to ‘pretty much emulate any likely platform’, said Pinson. They can also support frequency hopping and encrypted communications.
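Frequency hopping works because transmitter and receiver derive the same channel sequence from shared secret material and a common time reference. This keeps them in step, while a listener without the key cannot predict the next channel. The sketch below shows that principle only, under stated assumptions:

- the channel plan is hypothetical: 225-400MHz at 25kHz spacing;
- the derivation (HMAC-SHA256 over a slot number) is a stand-in chosen for clarity.

It is not how Viper or any fielded hopping waveform generates its sequence.

```python
import hashlib
import hmac


def hop_channel(key: bytes, slot: int, num_channels: int) -> int:
    """Channel index for a time slot, derived from a shared key (illustrative only)."""
    digest = hmac.new(key, slot.to_bytes(8, "big"), hashlib.sha256).digest()
    return int.from_bytes(digest[:4], "big") % num_channels


def channel_to_mhz(channel: int, base_mhz: float = 225.0, spacing_mhz: float = 0.025) -> float:
    # Hypothetical UHF plan: 7,000 channels at 25kHz spacing span 225-400MHz.
    return round(base_mhz + channel * spacing_mhz, 3)


def hop_sequence(key: bytes, start_slot: int, count: int, num_channels: int = 7000) -> list:
    """Frequencies (MHz) for `count` consecutive slots starting at `start_slot`."""
    return [channel_to_mhz(hop_channel(key, s, num_channels))
            for s in range(start_slot, start_slot + count)]
```

Two emulated radios loaded with the same key and synchronised slot counter compute identical sequences. A radio with a different key lands on unrelated channels.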

Pinson emphasised that MACE is a simulation framework with a robust plug-in architecture, allowing it to be extended by the end user. For example, MACE will model the sensor and weapon capabilities of the specific platform being simulated and of other assets in the battlespace, but users can override the default data to tailor it to their needs or to the classification level at which they are operating. They can also write their own code and applications to address specific use cases and missions.
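The override pattern Pinson describes can be pictured as a layered lookup. Default platform data is overlaid by the user’s own values, for example at a different classification level, and registered plug-ins can then adjust the merged result for a specific use case. All names and numbers below are hypothetical and notional. This is a sketch of the pattern, not MACE’s plug-in API.

```python
from typing import Callable

# Hypothetical, notional default data shipped with a simulation.
DEFAULT_DATA = {
    "notional_sam": {"max_range_km": 40.0, "max_altitude_km": 15.0},
    "notional_fighter": {"radar_range_km": 120.0},
}


class PlatformDatabase:
    """Defaults < user overrides < plug-in adjustments, applied in that order."""

    def __init__(self, defaults: dict):
        self._defaults = defaults
        self._overrides: dict = {}
        self._plugins: list = []

    def override(self, platform: str, **params: float) -> None:
        self._overrides.setdefault(platform, {}).update(params)

    def register_plugin(self, plugin: Callable[[str, dict], dict]) -> None:
        # A plug-in receives the merged parameters and returns (possibly) new ones.
        self._plugins.append(plugin)

    def params(self, platform: str) -> dict:
        merged = dict(self._defaults.get(platform, {}))  # copy: never mutate defaults
        merged.update(self._overrides.get(platform, {}))
        for plugin in self._plugins:
            merged = plugin(platform, merged)
        return merged


def jammed_radar(platform: str, p: dict) -> dict:
    """Example user plug-in: cut any radar range by 25% for a jamming scenario."""
    if "radar_range_km" in p:
        return {**p, "radar_range_km": p["radar_range_km"] * 0.75}
    return p


db = PlatformDatabase(DEFAULT_DATA)
db.override("notional_sam", max_range_km=55.0)  # e.g. the user's own data set
db.register_plugin(jammed_radar)
```

Keeping the shipped defaults untouched means a site can swap its own data in or out without editing the distributed package.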

Above: A close-up of the PTMT cockpit control panel, which in this case is not aircraft specific. The flexibility of BSI MACE's multifunction display (MFD, upper left) enables the setup of various panels and training scenarios. The controls shown here include MACE's up front controller (UFCD, top centre), VRSG's simulated sensor feed (top right) with an AN/DAS-1 overlay (slewed via one of the HOTAS throttle controls) along with MACE's artificial horizon, altimeter and HSI (bottom left), moving map (bottom centre) and radar warning receiver (bottom right). The UFCD interfaces with the MFD to enable input of mission-essential data and control of DIS radios. (Image: MVRSimulation)

Overall, the suite of tools in MACE enables multi-mission virtual role-playing in all warfare domains. In the context of the PTMT and air combat, MACE enables role-specific tactical displays that are integrated with the HOTAS controls and emulate real-world tactical systems.

This coupling of MACE with VRSG provides the degree of immersion ideally suited to training, from solo part-task mission objectives to large-scale, distributed live-virtual-constructive (LVC) rehearsal of major combat operations.

Pinson also noted that the PTMT can replay recorded data from live missions, allowing trainees to review the action and identify areas for improvement. He suggested this could also help in training instructors to analyse trainee performance and to recognise which actions to monitor. The added capabilities of the XR-3 help with hard-to-teach concepts such as high angle-off-boresight sight pictures in a BFM scenario.

NATO customer

The first customer for the PTMT is the NATO Tactical Leadership Programme (TLP), which purchased 30 of the trainers as COTS products and 54 VRSG licences plus 39 MACE licences in 2020. These were delivered and installed in August 2021 and entered service the following month.

MVR also created a 3D terrain database of the whole of Spain at no additional cost, using 0.50 metres-per-pixel (mpp) resolution source imagery and SRTM1 (30m) elevation source data. The terrain includes high-resolution modelled insets of Lugo province, Leon province, Los Llanos Albacete Air Base (LEAB), where the TLP is based, and the town of La Union in Murcia province.
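To give a sense of the scale of that database, a rough count of source samples is useful. The worked arithmetic below assumes a total area for Spain of about 506,000 km², a figure not given in this report. It ignores overlap, tiling and compression, so it is an order-of-magnitude estimate only.

```latex
A_{\text{Spain}} \approx 5.06\times10^{5}\,\text{km}^2 = 5.06\times10^{11}\,\text{m}^2

N_{\text{imagery}} = \frac{A_{\text{Spain}}}{(0.5\,\text{m})^2}
  = \frac{5.06\times10^{11}}{0.25} \approx 2.0\times10^{12}\ \text{pixels}

N_{\text{elevation}} = \frac{A_{\text{Spain}}}{(30\,\text{m})^2}
  = \frac{5.06\times10^{11}}{900} \approx 5.6\times10^{8}\ \text{posts}
```

The imagery therefore outnumbers the elevation posts by a factor of $(30/0.5)^2 = 3{,}600$. This is why high-resolution insets are usually reserved for areas of particular training interest such as the air base.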

The multinational TLP is the principal centre for NATO air forces tactical training and development of knowledge and leadership skills.

TLP runs four flight training course cycles per year, each for three weeks, with 12 missions. Courses include the Composite Air Operations (COMAO) Flying Course, which aims to improve the tactical leadership skills and flying capabilities of front-line fighter mission commanders, to improve the tactical interoperability of NATO air forces through exposure to tactics and capabilities of other air arms and to provide a flying laboratory for tactical employment concepts.

Taught in between these flying course cycles are academic curricula in disciplines including the COMAO Synthetic Course, a nine-day session focused on tactical leadership and COMAO mission planning. Both COMAO courses include classroom training on the PTMTs.

Observing that ‘probably nothing compares to TLP as a peer-on-peer major tactical facility’, Smith explained that the project was an example of MVR’s preferred business model.

Above: The NATO Tactical Leadership Programme has a total of 30 PTMT devices, using a curved screen rather than an MR headset. (Photo: NATO TLP)

‘BSI and MVR engaged with TLP representatives at the start and then developed the PTMT as an internal programme, beginning with a plywood prototype. Working closely with the TLP and other subject matter experts throughout the process meant that we were able to develop a fully COTS system that met their needs while being flexible enough to appeal to other users looking for off-the-shelf solutions,’ he added.

Smith suggested that this is a very cost-effective and low-risk route for the customer, as it avoids lengthy programme-of-record-style acquisitions and puts the onus on the developer to keep the solution current and relevant to customer requirements as these evolve.

The Varjo HMD was not adopted for the TLP installation, Smith said, as the installation took place before the advent of the high-fidelity XR-3 FE. The PTMTs installed at Los Llanos use curved screens.

Matthias Eichner, of the TLP Synthetics Section, said that the PTMTs are ‘a great tool to enhance the participants' immersion into the simulation. The immersion happens by keeping their aural and visual senses focused on the mission, which works quite well. VRSG presents a simulated world that is close enough to the real world, so the participants can believe that they are actually flying.’

‘We use [the PTMTs] as generic cockpits for the aircrew participants of the COMAO Synthetic Course and the COMAO Flying Course,’ he said, ‘in order to provide them with the ability to test their tactical plan versus virtual and constructive Red assets.’

He said that MACE and its plugins had been developed over the last two years to make controlling the simulation easier, to facilitate a more comprehensive way of running several Red assets from one console, and to provide C2 participants with more tools to communicate with the aircrew participants.

He noted that ‘using MACE to build the simulation framework enables us to connect the sim network to a Link 16 access point and have MACE be a bridge between the two, so any live players can see the simulated players in their Link 16 picture, and vice versa. MACE can turn the Link 16 tracks into MACE platforms, so the corresponding models appear in VRSG as well.’

He added: ‘We can use this LVC capability to add synthetic players controlled from the PTMTs to a live mission and use them as force multipliers. It would also allow us to participate in distributed simulations with other simulation facilities.’
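The Link 16 bridging Eichner describes, with received tracks turned into simulated platforms, follows a familiar gateway pattern. The bridge performs three steps:

1. create an entity the first time a track is heard;
2. update it on each later report;
3. drop it once reports stop.

The sketch below shows only that inbound half of the pattern, under stated assumptions:

- the track fields are a hypothetical, heavily simplified view, not the Link 16 J-series message format;
- the model names and the 12-second staleness threshold are illustrative.

It is not MACE’s implementation.

```python
from dataclasses import dataclass


@dataclass
class Track:  # hypothetical, simplified view of a received track
    track_id: str
    lat: float
    lon: float
    alt_m: float
    platform_hint: str  # notional category, e.g. "fighter" or "tanker"


@dataclass
class SimEntity:
    entity_id: int
    model: str  # model the image generator would draw
    lat: float
    lon: float
    alt_m: float
    last_update: float


MODEL_FOR_HINT = {"fighter": "generic_fighter", "tanker": "generic_tanker"}


class TrackBridge:
    def __init__(self, stale_after_s: float = 12.0):
        self.entities: dict = {}  # track_id -> SimEntity
        self._next_id = 1
        self.stale_after_s = stale_after_s

    def on_track(self, t: Track, now: float) -> SimEntity:
        """Create a simulated entity for a new track, or update an existing one."""
        e = self.entities.get(t.track_id)
        if e is None:
            model = MODEL_FOR_HINT.get(t.platform_hint, "generic_unknown")
            e = SimEntity(self._next_id, model, t.lat, t.lon, t.alt_m, now)
            self._next_id += 1
            self.entities[t.track_id] = e
        else:
            e.lat, e.lon, e.alt_m, e.last_update = t.lat, t.lon, t.alt_m, now
        return e

    def drop_stale(self, now: float) -> list:
        """Remove entities whose track has not been reported recently."""
        stale = [k for k, e in self.entities.items() if now - e.last_update > self.stale_after_s]
        for k in stale:
            del self.entities[k]
        return stale
```

The reverse direction, publishing simulated players as tracks so live aircraft see them, would mirror this with the roles swapped.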

Other customers

Smith said that MVR is now marketing the PTMT as a COTS product with the XR-3 as standard, for example as an optional extra with the container-based version of its joint terminal attack controller (JTAC) system, the Deployable Joint Fires Trainer (DJFT).

He said the portability, simplicity and customisable nature of the PTMT were particular attractions for customers: ‘it’s robust, light and is quick to set up’. The large-scale terrain database and the system’s interoperability for distributed training are also advantages, he added. He observed that there was significant interest from US forces in Asia.

Smith also noted that, in a collaboration with ZedaSoft at the US Air Force Academy’s (USAFA) Multi-Domain Laboratory, which opened in 2021, 100 VRSG licences are being used. They provide out-of-the-window and radar views in flight simulators, and sensor views in simulated remotely piloted aircraft (RPA) ground control stations. ZedaSoft provides the flight simulation model.

Each of the laboratory’s two flight bays contains a suite of 12 networked ZedaSoft Zuse reconfigurable flight simulators with a three-monitor out-of-the-window configuration, featuring VRSG visuals and 3D content, and an RPA control room housing three ZedaSoft Mockingbird RPA ground station simulators. For the latter, VRSG provides pilot and sensor operator EO/IR and synthetic aperture radar (SAR) views.

Smith said that the Academy’s use of VRSG to train future USAF pilots at the start of their careers reflects the system’s increasing integration into the wider air force training infrastructure. He added that this will be a major advantage for these cadets when they go on to use VRSG in more sophisticated applications such as the MJAT training system for the MQ-9 Reaper.

Future possibilities

In addition to providing a PTT for tactical flying training, Smith suggested that there could be significant possibilities in other domains with similar requirements, particularly with the immersive capabilities of the Varjo HMD.

‘In addition to allowing users to exploit the full capabilities of VRSG, the fidelity of the Varjo XR-3 has opened the door to countless future possibilities for mixed-reality training,’ he said. ‘We see many potential applications that would benefit from our, BSI’s and Varjo’s mix of technologies and our unique approach to serving users in this market space.’