Ground work

JANUARY 2024 | Technology Report | Military Training

Interactive environments built around multiple leading-edge simulation hardware and software systems are opening up new possibilities for collaborative mission rehearsal and wider training applications.

Above: The view from within a Varjo headset of real-time vehicle entities in VRSG’s Yuma terrain in a Sand Table training scenario. (Image: MVRsimulation)

The truth of the adage that ‘a picture paints a thousand words’ is demonstrated many times a day in a multitude of situations. It certainly holds for any military training or briefing process: images can get the point across far more effectively than words.

But while a flat, two-dimensional picture provides illustration, how much more can a three-dimensional (3D), immersive experience offer, where the scene can be viewed from multiple angles and points of view?

Models have been used for mission briefing and rehearsal for decades, ranging from detailed target replicas, through large-scale floor models, to the ad-hoc constructs created in the field by tactical commanders from squad level upwards to support the delivery of operational orders.

Indeed, this author can remember from a previous existence both constructing such models and evaluating those put together by students on training courses.

One generic name for models like this is the ‘sand table’, from the material used in some cases as the construction base. Sand is easily moulded into the necessary physical contours of the terrain in question, and the name has come to be used to refer to all models and environments used for mission briefing and rehearsal.

Modern technology now enables such models to be created in the virtual world. One example is the mixed-reality (XR) Sand Table developed by MVRsimulation, which was launched at I/ITSEC 2023 in Orlando in November.

Lines in the sand

MVRsimulation's Sand Table is a collaborative visualisation tool designed to deliver real-world networked or standalone military mission planning and after-action review (AAR) in three dimensions via integration with the Varjo mixed-reality headset. It features geospecific terrain, real-time models and culture rendered by MVRsimulation’s Virtual Reality Scene Generator (VRSG) synthetic environment in its round-earth 3D format.

VRSG is a Microsoft DirectX-based render engine. It is a Distributed Interactive Simulation (DIS)-based application, fully interoperable with other compliant software through DIS or the Common Image Generator Interface (CIGI).

According to Garth Smith, MVRsimulation’s President and Co-Founder, VRSG’s whole-world terrain database, complete with high-resolution insets, and its continuously updated model library are a large part of what sets MVRsimulation’s Sand Table apart.

“A virtual or mixed-reality sand table is only as good as the underlying imagery it uses, and the ability of the image generator to compile new imagery into the terrain,” he said. “Our Terrain Tools application can compile new imagery and run it in real time in mixed reality, all without needing to restart VRSG.”

At I/ITSEC 2023 the Sand Table was launched with a demonstration that used VRSG’s geospecific 2 cm-per-pixel inset terrain of Yuma Proving Ground, AZ. This terrain was collected by an MVRsimulation-owned drone and built as a high-resolution inset for training scenarios including joint air/land and special operations. Users can, however, collect imagery with their own assets and compile it into VRSG terrain using Terrain Tools.

“For a training scenario where changes to the terrain are critical, such as battle damage assessment, our Sand Table running VRSG is a major differentiator, providing astonishing resolution for all participants within the scenario whether they are experiencing the terrain from ground level at walking pace, or from an aircraft at altitude.”
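To put the 2 cm-per-pixel figure in perspective, here is a rough calculation for a hypothetical 1 km × 1 km inset stored as uncompressed 24-bit colour. Both the area and the storage format are assumptions for illustration only; the actual size and format of the Yuma inset are not stated.

```latex
\begin{align*}
\frac{1000\ \text{m}}{0.02\ \text{m/pixel}} &= 5\times10^{4}\ \text{pixels per side}\\
(5\times10^{4})^{2} &= 2.5\times10^{9}\ \text{pixels}\\
2.5\times10^{9}\ \text{pixels}\times 3\ \text{bytes/pixel} &= 7.5\times10^{9}\ \text{bytes}\approx 7.5\ \text{GB}
\end{align*}
```

That volume applies to a single square kilometre, before any compression or level-of-detail processing. It suggests why the ability to compile new imagery into the terrain matters as much as the raw resolution.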

MVRsimulation already uses VRSG to provide the synthetic environment in its XR joint terminal attack controller (JTAC) training system, the accredited Deployable Joint Fires Trainer (DJFT), and in its fixed-wing Part Task Mission Trainer (PTMT) flight simulator. Both systems also use Varjo headsets, and they can be networked together to provide a single, realistic mission scenario with multiple role-players.

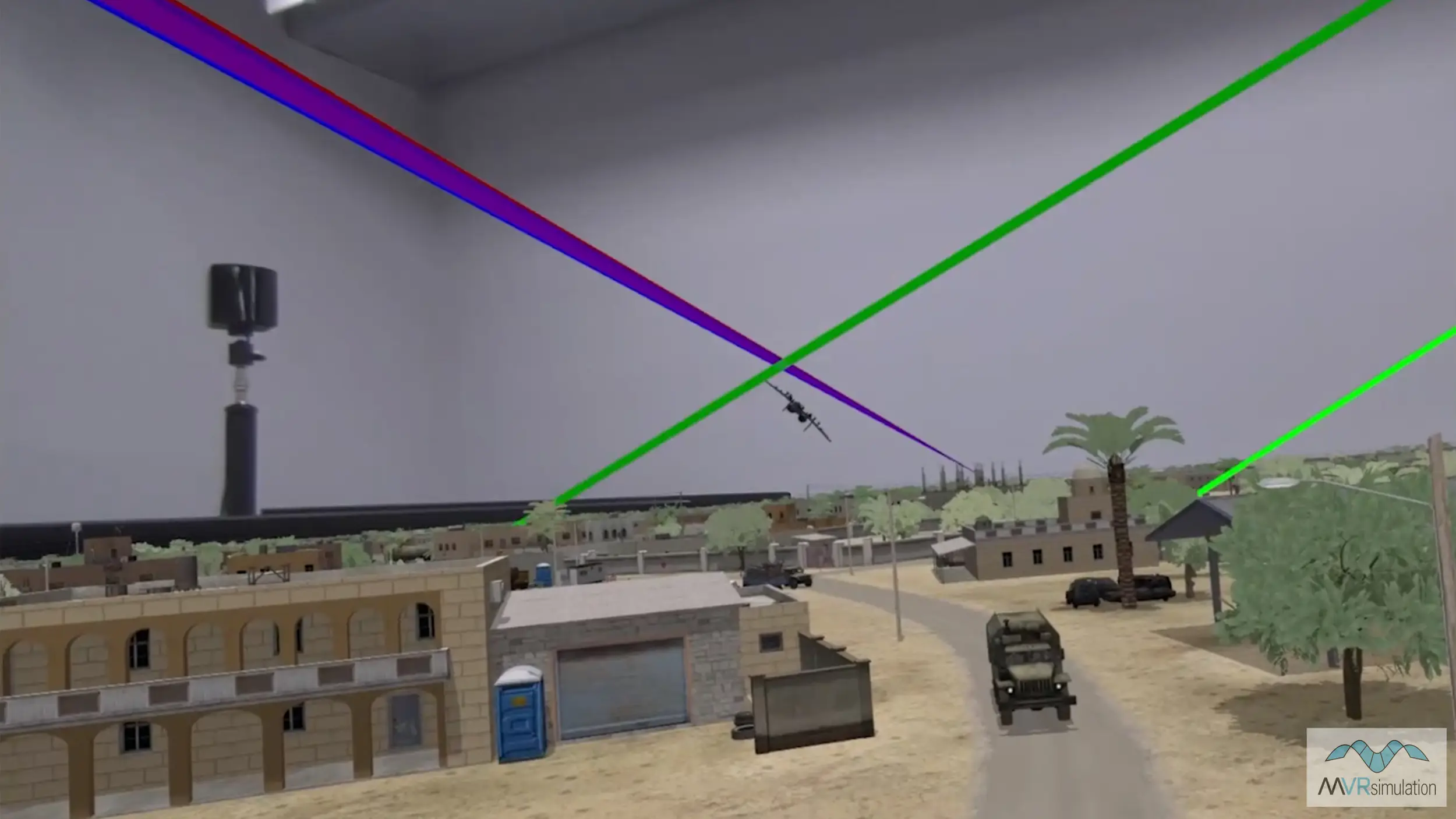

Above: The student’s zoomed-in view inside the Varjo headset of a virtual Sand Table scene displaying DIS-based entities, showing the JTAC trainee’s eye gaze (red and blue cones) and instructor’s view (green frustum) with real-world view. (Image: MVRsimulation)

When the Varjo headset is employed with the Sand Table, users are immersed in an XR environment that allows them to explore the visualisation of the scenario and terrain.

It enables them to understand how their plans will be affected by the terrain and physical features of the landscape, such as mountains, valleys, vegetation and waterways, as well as cultural and man-made features such as buildings, dense urban areas, roads, bridges, dams or other infrastructure.

Users can plan, enact and review missions and training scenarios, either by interacting directly with objects in VRSG or by using any DIS-based commercial or government off-the-shelf (COTS or GOTS) semi-automated forces (SAF) software.

VRSG listens for DIS entities on the network and displays them as 3D models in the Sand Table. Because the scenario is carried as DIS traffic, training sessions can also be recorded and played back.
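For readers unfamiliar with DIS, the following minimal Python sketch shows what ‘listening for DIS entities’ involves at the packet level. It is not MVRsimulation’s code. It assumes the standard IEEE 1278.1 Entity State PDU layout (big-endian, PDU type 1, location as three 64-bit geocentric coordinates) and decodes only the fields a viewer needs to place a model, keeping the latest state for each entity ID. The names `parse_entity_state` and `EntityTable` are hypothetical. In practice the bytes would arrive over a UDP socket; DIS exercises commonly use port 3000.

```python
from __future__ import annotations

import struct
from dataclasses import dataclass

# Header common to all DIS PDUs (IEEE 1278.1), big-endian: protocol version,
# exercise ID, PDU type, protocol family (4 x uint8), timestamp (uint32),
# length (uint16), padding (uint16).
PDU_HEADER = struct.Struct(">BBBBIHH")
ENTITY_STATE_PDU_TYPE = 1

# Entity State PDU body up to and including location: entity ID (site,
# application, entity: 3 x uint16), force ID (uint8), number of variable
# parameters (uint8), entity type and alternative entity type (2 x 8 bytes,
# kept raw), linear velocity (3 x float32), location (3 x float64, metres).
ESPDU_BODY = struct.Struct(">HHHBB8s8s3f3d")


@dataclass
class EntityState:
    entity_id: tuple[int, int, int]
    force_id: int
    location: tuple[float, float, float]  # geocentric X, Y, Z in metres


def parse_entity_state(pdu: bytes) -> EntityState | None:
    """Return the decoded entity state, or None if not an Entity State PDU."""
    if len(pdu) < PDU_HEADER.size + ESPDU_BODY.size:
        return None
    _, _, pdu_type, _, _, _, _ = PDU_HEADER.unpack_from(pdu, 0)
    if pdu_type != ENTITY_STATE_PDU_TYPE:
        return None
    (site, app, ent, force, _nparams, _etype, _alt_etype,
     _vx, _vy, _vz, x, y, z) = ESPDU_BODY.unpack_from(pdu, PDU_HEADER.size)
    return EntityState((site, app, ent), force, (x, y, z))


class EntityTable:
    """Hypothetical scene table: latest known state for each DIS entity ID."""

    def __init__(self) -> None:
        self.entities: dict[tuple[int, int, int], EntityState] = {}

    def on_pdu(self, pdu: bytes) -> None:
        state = parse_entity_state(pdu)
        if state is not None:
            # A renderer would create or move the matching 3D model here.
            self.entities[state.entity_id] = state
```

A real image generator would also convert the geocentric coordinates into its own terrain frame and dead-reckon entities between updates. Both steps are omitted to keep the sketch short.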

Additionally, depending on the SAF software used, VRSG can render in 3D various processes carried out by the SAF. For example, when Battlespace Simulations’ MACE is installed on the same machine as VRSG, MACE can use shared memory between the two processes to pass early-warning beam information via its Beam Viewer Control plugin. This includes beam processes such as radio, acquisition, acquisition-track, target tracking, missile guidance, illuminator, standoff jammer and self-protection jammer, among others.
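As a generic illustration of same-machine shared memory, the Python sketch below has a producer write a fixed-layout record into a named shared-memory block. A consumer then attaches to the block by name and reads the record back. The record layout (`BEAM_RECORD`), the numeric beam codes and the helper names are all hypothetical. MACE’s actual Beam Viewer Control data format is not described here, so the sketch shows only the mechanism, not the plugin’s interface.

```python
import struct
from multiprocessing import shared_memory

# HYPOTHETICAL beam record (not MACE's real format), little-endian, no padding:
# beam process code (uint8), emitter DIS entity ID (3 x uint16),
# beam-centre azimuth, elevation and beam width (3 x float32, degrees).
BEAM_RECORD = struct.Struct("<B3H3f")


def publish_beam(shm, process_code, emitter_id, azimuth, elevation, width):
    """Producer side (e.g. the SAF): overwrite the record at offset 0."""
    BEAM_RECORD.pack_into(shm.buf, 0, process_code, *emitter_id,
                          azimuth, elevation, width)


def read_beam(shm):
    """Consumer side (e.g. the renderer): decode the current record."""
    code, site, app, ent, az, el, width = BEAM_RECORD.unpack_from(shm.buf, 0)
    return {"process": code, "emitter": (site, app, ent),
            "azimuth": az, "elevation": el, "width": width}


def demo():
    # The producer creates the block; the consumer attaches to it by name,
    # as a second process on the same machine would.
    producer = shared_memory.SharedMemory(create=True, size=BEAM_RECORD.size)
    try:
        publish_beam(producer, 3, (1, 2, 42), 45.0, 10.0, 2.5)
        consumer = shared_memory.SharedMemory(name=producer.name)
        try:
            return read_beam(consumer)
        finally:
            consumer.close()
    finally:
        producer.close()
        producer.unlink()  # release the block once both sides are done
```

Both sides live in one process here only so the sketch can run on its own. A real two-process link would also need some synchronisation, such as a sequence counter, so the reader never sees a half-written record.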

Flexible capability

MVRsimulation has identified four distinct applications for the virtual Sand Table. The first of these is training mission planning/rehearsal. Prior to conducting a mission using simulators such as the DJFT or PTMT, trainees can join their colleagues and instructor to plan the mission, watch a run-through of the scenario, and make adjustments as required.

Secondly, standalone classroom training is supported. In classroom settings, the Sand Table can provide a learning tool for students and instructors to engage in mission planning and rehearsal to train for tactical decision-making.

Students can see the results of their decisions in real time and gain immediate feedback from the instructor. Other trainees in the room can watch the view from the headsets projected onto screens while instructor and student discuss tactics.

Following a mission, the Sand Table also delivers AAR. Once missions are completed in networked simulators, students and instructors can review a DIS stream playback, allowing them to experience the scenario from all angles, review critical outcomes and deepen learning opportunities.
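DIS recording and playback can be pictured as logging every raw PDU with its arrival time, then re-sending the log in order with the original gaps between packets. The Python sketch below is a simplified, hypothetical illustration: `DisLog` and `playback` are not VRSG functions. The `send` callback stands in for writing to a UDP socket, and `sleep` is injectable so the timing can be checked without waiting.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DisLog:
    """Hypothetical recorder: raw PDUs with their arrival times in seconds."""
    records: list[tuple[float, bytes]] = field(default_factory=list)

    def record(self, arrival_time: float, pdu: bytes) -> None:
        self.records.append((arrival_time, pdu))


def playback(log: DisLog, send: Callable[[bytes], None],
             speed: float = 1.0,
             sleep: Callable[[float], None] = time.sleep) -> None:
    """Re-send recorded PDUs in time order, scaling each gap by 1/speed."""
    previous = None
    for arrival_time, pdu in sorted(log.records, key=lambda r: r[0]):
        if previous is not None and arrival_time > previous:
            sleep((arrival_time - previous) / speed)
        send(pdu)
        previous = arrival_time
```

Playback simply re-sends the original traffic. As a result, any DIS viewer on the network can watch it from a viewpoint of its own choosing, which fits the idea of reviewing a mission from all angles. A production recorder would more likely use the PDUs’ own timestamps and correct for sleep drift accumulated over a long session.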

Above: An instructor view of 3D virtual terrain. The student is seen here in the real-world view just beyond the edge of the virtual Sand Table with correlated blue frustum displaying their current view. (Image: MVRsimulation)

AAR of the trainee’s recorded eye-gaze log also allows students and instructors to understand where the trainee was looking throughout the mission, giving insight into whether critical cues or incidents were missed.

Finally, the Sand Table offers a real-world mission planning tool. As an XR replacement for current operational methods, the system allows users to rehearse actual mission plans, giving all parties a clear understanding of how to complete the task most effectively.

When connected with a DIS-based SAF, users can also implement the mission following their planning to evaluate the success of its objectives. Underpinning this, users of VRSG have access to its worldwide geospecific terrain database (including high-resolution insets), as well as the ability to build their own digital replica of real locations from source imagery.

The headset connection

As in other MVRsimulation simulators, the XR capability of the Varjo headset provides real-world pass-through for direct interaction with other observers. Without removing the headset, users can operate physical emulated military equipment and GOTS military hardware, such as the ATAK tactical display via the Special Warfare Assault Kit (SWAK), with imagery correlated to VRSG terrain. This maximises immersion in the virtual world and suspension of disbelief.

Users wearing the headset can also see past the ‘edge’ of the Sand Table into the real world and interact or communicate with other participants or students watching the exercise on external screens.

The eye-tracking capability of the headset makes the gaze of the exercise participants, rendered by VRSG, visible to observers as a coloured frustum (a pyramidal beam) throughout the event. The frustum is valuable for training because it allows an instructor to verify in real time whether the student is looking at the correct location or entity.
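The check an instructor makes by eye can be expressed geometrically. The Python sketch below simplifies the rendered frustum to a circular cone around the tracked gaze direction. It then tests whether an entity falls inside that cone. The function name `is_in_gaze` and the 5-degree half-angle are illustrative assumptions, not values taken from VRSG or Varjo.

```python
import math


def is_in_gaze(eye, gaze_dir, target, half_angle_deg=5.0):
    """True if target lies inside a cone of the given half-angle around gaze_dir.

    eye, gaze_dir and target are (x, y, z) tuples in the same frame;
    gaze_dir need not be normalised.
    """
    to_target = [t - e for t, e in zip(target, eye)]
    dist = math.hypot(*to_target)
    norm = math.hypot(*gaze_dir)
    if dist == 0 or norm == 0:
        return False  # degenerate: target at the eye, or no gaze vector
    # Cosine of the angle between the gaze ray and the line of sight to the target.
    cos_angle = sum(g * v for g, v in zip(gaze_dir, to_target)) / (norm * dist)
    return cos_angle >= math.cos(math.radians(half_angle_deg))
```

For example, with the eye at the origin looking along +X, an entity 100 m ahead and 5 m to the side sits about 2.9 degrees off the gaze line and counts as ‘seen’. One 20 m to the side (about 11 degrees off) does not.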

Other Varjo users connected to the DIS-based network can also output their eye-gaze tracking into VRSG. In AAR, this tracking of role-players is critical for removing ambiguity about where a student’s attention was focused throughout the mission.

Above: Users are immersed in the virtual Sand Table via Varjo XR headsets. They can interact with physical devices in the real world as well as read and write without the need to remove the headset. (Image: MVRsimulation)

Each user has a Valve Index controller to control their own unique view within the Sand Table, zooming in for close-up evaluation of a single entity or out to a top-down view of the entire battlefield. The controllers also provide a virtual pointer used to highlight specific elements and direct other participants’ attention to a particular location or entity.

Demonstration scenario

For the launch demonstration at I/ITSEC the Sand Table was networked with the DJFT and PTMT simulators. Participants conducted JTAC, close air support (CAS) and forward observer (FO) mission planning in the 2 cm Yuma terrain, then watched the mission unfold in real time as JTAC trainees carried it out using the DJFT. An AAR was then conducted using VRSG’s DIS recording and playback capabilities.

The demonstration scenario included a behind-lines JTAC call-for-fire on a group of military vehicles, missile launchers and radar preparing to launch short-range ballistic missiles. The scenario mimicked a recent real-world event in western Iraq in which militia fighters fired on US troops.

The demonstration used new 3D real-time VRSG vehicle model entities that replicate the platforms used in the actual event, with detailed geometry and accurate paint schemes, giving users the ability to experience the training effect delivered by models of currently deployed platforms.

According to Smith, the system received significant interest at I/ITSEC from its potential customer base. The Sand Table has been developed over the past year as a private venture, although the initiative was prompted by requests from the JTAC training community.

Smith said that the system was now fully developed and functional but could be enhanced to meet specific customer requirements.

This private venture business model is MVRsimulation’s preferred route for development. ‘We’re all self-funded,’ Smith said, ‘because we don’t wish to be beholden to any particular development contract.’ He added that ‘the development of the Sand Table was quite straightforward and a logical next step in terms of our technology road map’.

Above: VRSG real-time model entities representing in-service weapons and radar seen here in a Sand Table training scenario. (Image: MVRsimulation)

Smith highlighted the Sand Table’s lightweight nature and potential for forward deployment, observing that ‘in its standalone mode it’s a relatively lightweight system; we are working toward a two-headset containerised Sand Table in Q1 2024 that will be highly deployable for field operations’.

Addressing the system’s overall capabilities and utility, Smith added: ‘The ability to carry out this training in VRSG’s geospecific terrain, using real-world locations and models based on actual vehicles, weapons and radar equipment being deployed by military forces and insurgents in current conflicts, creates an extremely effective and adaptable training tool to help prepare military personnel for the challenges they face in theatre.’

The networked demonstration on the MVRsimulation booth at I/ITSEC showed how the Sand Table adds a new solution to the company’s mixed-reality military training portfolio.

‘Everything we showed at I/ITSEC demonstrates not just the advanced XR training capabilities of our simulator hardware, but the power of VRSG to bring everything together to deliver game-changing XR training for military users,’ Smith said.

‘The Sand Table demonstration itself pulled in our 2cm Yuma terrain, brand new real-time military vehicle models pulled from newspaper headlines, and the ability to experience training scenarios being carried out in the DJFT and PTMT in a 3D virtual world – all of which is, we believe, a unique capability for the military training community.’